How Natural Language Processing Can Help Eliminate Bias in Performance Reviews

Unconscious bias in performance reviews has significant ramifications for corporate diversity, equity and inclusion goals. Studies show that a manager’s unconscious bias in performance reviews can lead to unfair treatment of employees based on their age, sex, race or sexual orientation.

At Wayfair, the Boston-based e-commerce company that sells household goods, managers use natural language processing (NLP) to mitigate bias in performance reviews. When Wayfair began using an NLP analytics tool in 2019, the company discovered that development opportunities were often described differently for women than for men. The most prominent example was the word “confidence,” which appeared far more often in women’s performance reviews than in men’s.
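The article does not say how Wayfair measured this difference. A minimal sketch, assuming a simple approach, is to compare how often a term appears per 1,000 words in each group’s reviews. The function name `term_rate_per_1000` and the toy reviews below are hypothetical and are not Wayfair’s data or method.

```python
import re


def term_rate_per_1000(reviews: list[str], term: str) -> float:
    """Return occurrences of a single-word `term` per 1,000 words in `reviews`."""
    term = term.lower()
    total_words = 0
    hits = 0
    for text in reviews:
        # Lowercase and split into simple word tokens (letters and apostrophes).
        words = re.findall(r"[a-z']+", text.lower())
        total_words += len(words)
        hits += sum(1 for w in words if w == term)
    return 1000 * hits / total_words if total_words else 0.0


# Hypothetical toy reviews, grouped by the reviewed employee's gender.
reviews_by_group = {
    "women": [
        "Build more confidence when presenting to leadership.",
        "Strong delivery; show confidence in decisions.",
    ],
    "men": [
        "Strong delivery on the roadmap.",
        "Keep driving results across teams.",
    ],
}

rates = {group: term_rate_per_1000(texts, "confidence")
         for group, texts in reviews_by_group.items()}
print(rates)
```

Normalizing by word count, rather than comparing raw counts, keeps the comparison fair when one group’s reviews are simply longer or more numerous.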

For KeyAnna Schmiedl, Wayfair’s Global Head of Culture and Inclusion, this discovery was an opportunity to drive behavioral change at the company. Her team began running unconscious bias training sessions centered on the word “confidence.”

“We told everyone to listen out for this word whenever we were discussing women in calibrations across the company, and people picked up on it quickly because it’s such a common word,” Schmiedl says. “What we said to them is that the word itself is not bad, but how we’re using it is quite subjective. What confidence means to me and what I want to see when I say ‘improve your confidence’ or ‘improve your work’ are going to look different from another person’s perspective. So how can we be a bit more objective in the instructions that we’re giving to people to close that gap?”

How natural language processing helps reduce gender bias

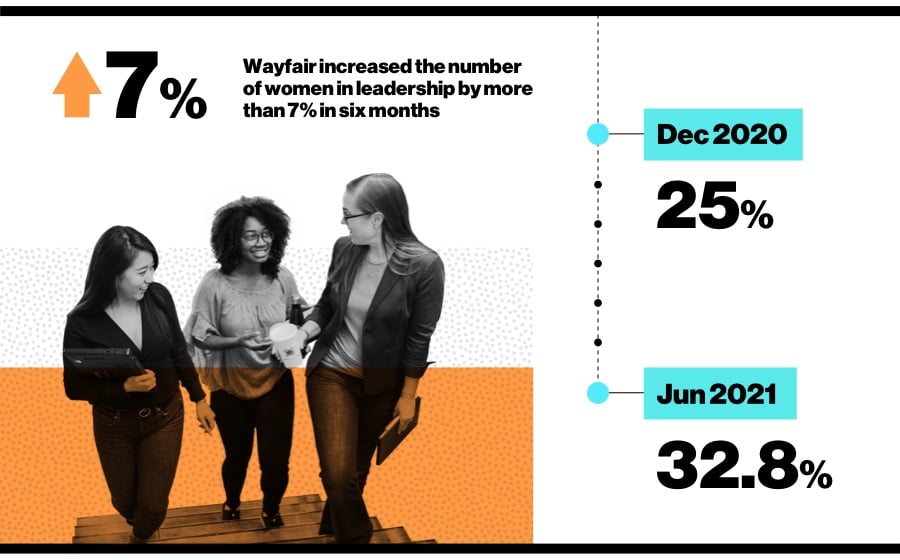

In the first review cycle after introducing this language awareness, Wayfair found that women were rated higher overall than men, for the first time in many cycles. The company also increased the share of women in leadership by 7.8 percentage points in six months, from 25% at the end of the fourth quarter of 2020 to 32.8% at the end of June 2021.

“We knew we were on to something, and that’s what told us to keep going and use the same process for other dimensions of diversity,” Schmiedl says.

Wayfair is a signatory of CEO Action for Diversity & Inclusion™, a coalition founded in 2017 on the shared belief that diversity, equity and inclusion is a societal issue, not a competitive one, and that collaboration and bold action from the business community, especially CEOs, are vital to driving change at scale.

At Wayfair, employees go through a 360-degree review process. After employees complete their self-evaluations and gather feedback from peers and other stakeholders, a manager uses that information to write a performance review. The company’s Bias Analyzer tool, built into the same program where all review information is entered, uses NLP to scan reviews for words and phrases the company has identified as potentially biased.

The tool highlights words and phrases that the company has found appear more often in reviews of certain groups of people. When language is flagged as potentially biased, the tool points managers to resources that explain why that language is unhelpful in a particular context and help them find more objective wording. Managers are also given time to look across all of their reviews to assess whether they are making equitable comments across their entire team.
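The internals of the Bias Analyzer are not described here. As a rough sketch only, assuming a fixed watch list of phrases, a flagger might match each phrase as a whole word, case-insensitively, attach guidance for the manager, and roll the counts up per employee for a team-level view. `WATCH_LIST`, `Flag`, `flag_review` and `team_summary`, along with the guidance strings, are hypothetical names invented for this illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical watch list mapping a phrase to guidance shown to the manager.
# This is NOT Wayfair's actual word list or resource text.
WATCH_LIST = {
    "confidence": "Describe the specific behavior you want to see.",
    "more analytical": "Name the analysis or decision you expect.",
}


@dataclass
class Flag:
    phrase: str    # watch-list phrase that matched
    start: int     # character offset where the match begins
    end: int       # character offset just past the match
    guidance: str  # resource text to show the manager


def flag_review(text: str, watch_list: dict[str, str] = WATCH_LIST) -> list[Flag]:
    """Return every whole-word, case-insensitive watch-list match, in text order."""
    flags = []
    for phrase, guidance in watch_list.items():
        pattern = re.compile(r"\b" + re.escape(phrase) + r"\b", re.IGNORECASE)
        for m in pattern.finditer(text):
            flags.append(Flag(phrase, m.start(), m.end(), guidance))
    return sorted(flags, key=lambda f: f.start)


def team_summary(reviews: dict[str, str]) -> dict[str, int]:
    """Count flags per employee so a manager can compare reviews across a team."""
    return {name: len(flag_review(text)) for name, text in reviews.items()}


for f in flag_review("Be more analytical in planning and show more confidence."):
    print(f"{f.phrase!r} at {f.start}-{f.end}: {f.guidance}")
```

Word-boundary matching keeps “confidence” from firing on “confidently,” but a keyword list like this cannot tell supportive uses of a word from subjective ones, which is why human review of the flagged context still matters.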

Schmiedl emphasizes the importance of the context in which words are used, a nuance not easily captured in the NLP analysis.

“‘Confidence’ comes up across the board, whether we’re talking about race, ethnicity or gender,” says Schmiedl. “But the way that we’re talking about confidence is different. For women, it showed up more as an opportunity area. For racial and ethnic underrepresented groups, it showed up more as, ‘You seem very confident.’ So, the context in which we’re talking about the word really matters. And that’s what we try to orient people to—that these words themselves are not inherently bad.”
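Because the same word can carry different meanings, a reviewer needs to see how it is used, not only that it appears. One common way to surface that, sketched here as an assumption rather than as Wayfair’s approach, is a keyword-in-context view that returns each occurrence with a few words on either side. The function `keyword_in_context` and its `window` parameter are hypothetical.

```python
import re


def keyword_in_context(text: str, term: str, window: int = 4) -> list[str]:
    """Return each occurrence of a single-word `term` with `window` words on each side."""
    words = text.split()
    snippets = []
    for i, w in enumerate(words):
        # Strip punctuation so "confident," still matches "confident".
        if re.sub(r"[^a-z']", "", w.lower()) == term.lower():
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            snippets.append(" ".join(words[lo:hi]))
    return snippets


print(keyword_in_context("You seem very confident in client meetings.", "confident", window=2))
print(keyword_in_context("Work on your confidence when presenting.", "confidence", window=2))
```

The two snippets mirror the distinction Schmiedl draws: one describes confidence as an observed trait, the other frames it as an area for improvement.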

Applying technology to address racial bias

After running the NLP analysis for gender, the company decided to build a tool that analyzed bias through a racial lens.

For gender, the company had initially tracked only the word “confidence.” For race, however, it needed to build another tool that could analyze the presence of several words and phrases that might signal bias. Wayfair created its Performance Equity Task Force to define the steps needed to build that tool. The task force also developed racial equity training that helped team members understand how language can be biased. One finding was that the word “analytic” came up often in reviews of Black employees.

“Out of context, it’s like, ‘Well, what about it?’” Schmiedl says. “But in context for a particular group, it could be significant. If I don’t believe that that group is particularly analytical, then I think I’m giving you specific feedback to ‘use more analytics’ or ‘be more analytical.’ But if that feedback is coming to me, I am not entirely certain what you mean by that. And I’m also not clear that I’m not doing those things; maybe I’m just not doing it to your standard.”

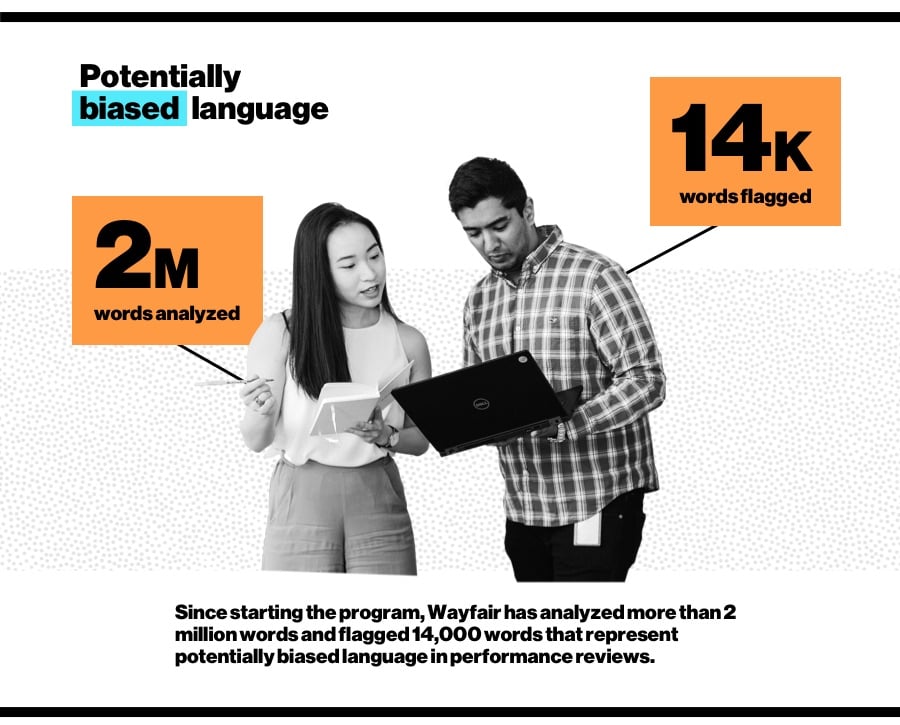

Wayfair uses this analysis to flag potential bias across all of its performance reviews, and task force members use the results to drill down into flagged reviews and remove biased language. Since the program began, the company has analyzed more than 2 million words in performance reviews and flagged 14,000 of them as potentially biased. As a result, Wayfair says it has seen a decrease in bias associated with underrepresented racial and ethnic groups, gender and LGBTQIA status.

“It’s all because we’re asking people to create that moment of pause where they can then apply that learning,” Schmiedl says.